Katie McIntyre

No-Code Generative AI Application Builder

Challenge

Democratize AI by breaking down the complexities of generative artificial intelligence (GenAI), empowering less experienced users to craft custom GenAI applications trained on personal data without knowledge of coding.

Team

Ridhima Gupta @ Databricks

Ashley Frith, Benedicte Knudson, Margot Lin, and Hang Wang @ Georgia Tech

Solution

An intuitive, user-friendly platform that makes it straightforward and efficient to create and deploy customized GenAI-powered tools for diverse applications, through a step-by-step basic mode and a node-based sandbox view.

Role

Lead UX Researcher

Project Manager

Process

Orienting to the Problem Space

Generative Research

Findings and Communication

Iterative Design and Feedback

Evaluative Research

-

stakeholder meetings

-

background research

-

literature and social media review

-

competitive analysis

-

semi-structured interviews

-

contextual inquiry

-

survey

-

qualitative coding

-

affinity mapping

-

quantitative data visualization

-

discussion of research findings

-

user journey map

-

personas

-

user needs and design implications

-

ideation, sketches, and wireframes

-

high-fidelity prototype

-

feedback sessions

-

usability testing

-

cognitive walkthrough

Problem Space

Methods

To kick off our project, we defined our initial research strategy and objectives. Since none of our group members had extensive experience or technical knowledge related to artificial intelligence, we needed to quickly dive in and expose ourselves to the world of GenAI. We identified research objectives and rapid research methods to orient to the problem space.

Findings

Ideal Problem Space

Our initial research findings clearly highlighted the need for a GenAI application builder intentionally designed to maximize flexible customization for diverse use cases, simplify complex technical processes for non-technical users, promote education and AI literacy by connecting users to learning resources, and employ a user-centric design built on visual displays of information, drag-and-drop features, and step-by-step interactive guidance.

Background

Versatility of GenAI across applications and contexts (Sallam, 2023)

Widespread interest, limited understanding (Seger et al., 2023)

Inaccessibility and inadequate tools for novices (Nah et al., 2023)

Stakeholders

Users

Novice and expert users displayed differing, often conflicting needs, goals, and preferences within our problem space. To solidify research and design objectives, we identified novice users as our primary group of interest, with expert users' needs considered secondary to novices' needs.

Existing Products

We completed a competitive analysis of products on the market in order to identify commonly valued functionalities as well as gaps in the existing market.

Problem Statement

How might we support less-experienced users in crafting custom GenAI applications using their own data?

Generative Research

Research Questions

Grounded in our orientation to the problem space, we developed the following research questions.


Next, we developed an in-depth table of questions to answer through our generative research methods and chose methods based on their fit to our information needs (excerpt below).

Semi-Structured Interviews

We conducted five semi-structured interviews with experts in the field of GenAI application-building. These experts included startup founders, academics, and UX research specialists. By interviewing experts, we gained a comprehensive view of the overarching user journey, users' learning curve from novice to expert, and insights from people intimately familiar with our target population.

Method Justification

Semi-structured interviews with experts allowed us to flexibly explore industry knowledge, collecting deep qualitative insights and big-picture perspectives on the problem space and target population.

Information Goals

-

Capture expert narratives

-

Understand user dynamics (backgrounds, motivations, challenges, and values)

-

Explore GenAI use cases

-

Gauge customization needs

Data + Analysis

We used qualitative coding and thematic analysis to derive key themes from interview transcripts.

Contextual Inquiry

We conducted five contextual inquiries with novice users, observing them as they built their own GenAI applications with an existing tool and prompting them to think aloud throughout the process. Observing and interviewing novices let us understand their actual behaviors, actions, and thought processes in a realistic setting, task, and context.

Method Justification

Contextual inquiries with novice users allowed us to directly observe user actions and reactions, probing users for their thought processes and collecting concrete qualitative observational data related to novice experiences, opinions, and user flow.

Information Goals

-

Document novice user flow

-

Observe GenAI application-building challenges for novice users

-

Focus on user feedback for chatbot creation, configuration, testing, deployment, and integration

Data + Analysis

We used qualitative coding and affinity mapping to derive key themes from observational data and contextual inquiry transcripts.

Survey

We received survey responses from 22 users with varying levels of experience and kinds of interest in GenAI. Asking quantifiable questions of a larger sample let us gauge user group needs and preferences and back our qualitative findings with quantitative support.

Method Justification

Surveys offered us a broad lens on user perceptions, self-reported behaviors, and preferences across diverse demographics. A survey allowed us to gather quantifiable data from a larger audience and identify patterns, trends, and areas for improvement.

Information Goals

-

Identify the demographics of the target population (roles, levels of experience)

-

Discover use cases

-

Quantify the importance of various features

-

Capture a broader scope of user needs, preferences, challenges, and ideas

Data + Analysis

We presented the survey data in graphs, charts, and lists, then triangulated it with findings from our other methods to validate and extend our qualitative results.

Findings and Communication

Discussion of Research Findings

After collecting and presenting data from each method, we triangulated the data from our various methods to synthesize and discuss key findings. When certain themes reappeared across multiple methods, we took note and compiled a summary sorted by key research question.

User Journey Map

Based on our research findings, we created a user journey map to visualize the big picture of our users' experiences. This artifact made the user flow tangible, highlighted opportunities, helped us identify pain points, and kept us empathetic toward the user throughout the design process.

Personas

To ground our design process in evidence-based, user-centric insights, we developed two user personas intended to improve cross-functional communication, sharpen design focus, and support informed decision-making. Sarah and Alex represent individuals within our primary user group (novices) and secondary user group (experts), respectively.

User Needs and Design Implications

Informed by our generative user research findings, we consolidated user needs into a list. To begin orienting to the design process, we translated the user needs into specific design implications. Through our design implications, we distilled actionable insights from research findings, bridging the gap between research outcomes and practical design decisions.


Iterative Design and Feedback

Ideation, Sketches, and Wireframes

To begin the ideation process, we created a table of functionality examples from existing products, moodboards using the SCAMPER method for unique idea generation, and a tentative outline of our user flow. Building on that user flow, we created sketches, low-fidelity wireframes, and high-fidelity wireframes, gathering feedback from novice users and industry experts between each design step to inform our iterations.

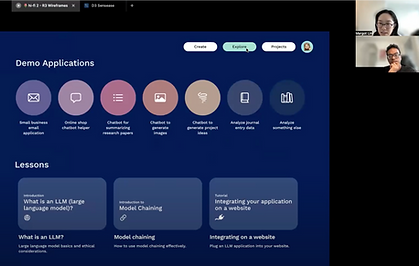

Prototype Functionality

We designed a high-fidelity, interactive prototype that empowers non-technical users to build custom GenAI applications using their own data. The prototype promotes education and AI literacy through an informative "Explore" section, maximizes flexible customization with a step-by-step basic "Create" mode and a visual sandbox view for more experienced users, and employs user-centric design through a straightforward, clear interface.

User Flow Diagram

The user flow diagram below illustrates the user's experience of navigating our prototype to begin creating GenAI applications.


GenAI Application Creation Process

In order to create GenAI applications in our high-fidelity prototype, users complete the following steps.


Prototype Features

Our high-fidelity, interactive prototype addressed all of our identified user needs and design implications. Derived from our high-fidelity wireframes, here are visual explanations of how our prototype implements each design implication.

High-Fidelity Prototype

Explore our interface and walk through the process of building your own GenAI application!

Evaluative Research

Usability Testing

We conducted task-based usability testing with four novice users. Users completed three tasks: finding inspiration for potential GenAI applications, creating an application in basic mode, and creating an application in advanced mode. We asked users to think aloud during testing to elicit rich qualitative data, and we collected quantitative data through a post-test survey. Testing with novice users let us directly observe and quantify the prototype's effectiveness, and it revealed gaps and shortcomings to address in future iterations.


Method Justification

Usability testing enabled us to systematically evaluate the product by observing real users as they interact with it, thereby identifying usability issues and gathering actionable insights to improve user experience and satisfaction.

Information Goals

-

Quantify alignment with user needs

-

Assess compliance with design requirements

-

Explore and identify accessibility concerns

-

Evaluate effectiveness and efficiency in core functions

Data + Analysis

We used qualitative coding and thematic analysis to derive key themes from usability testing transcripts. We also graphed the post-test survey data to quantify how well the prototype met user needs.

All four users (100%) were able to complete each task, indicating overall task success.

Cognitive Walkthrough

To rigorously assess the usability and user experience of our prototype, we conducted cognitive walkthroughs with three domain experts. Each expert navigated through the application using a think-aloud protocol, voicing their thought process, reactions, and feedback in real time. On each screen, four guiding questions scrutinized the design through the lens of user goals and expectations.

Method Justification

Cognitive walkthroughs allowed us to obtain highly critical, constructive feedback from industry experts. We were able to directly uncover the experts' mental models of our prototype and outline next steps for improving our product.

Information Goals

-

Evaluate learnability of prototype

-

Improve match between system functionality and user mental models

-

Understand learning curve for novices

-

Quantify compliance with design requirements

Data + Analysis

We used qualitative coding and thematic analysis of cognitive walkthrough transcripts to derive key themes and concrete, actionable next steps for the research and development of sandbox.ai.
